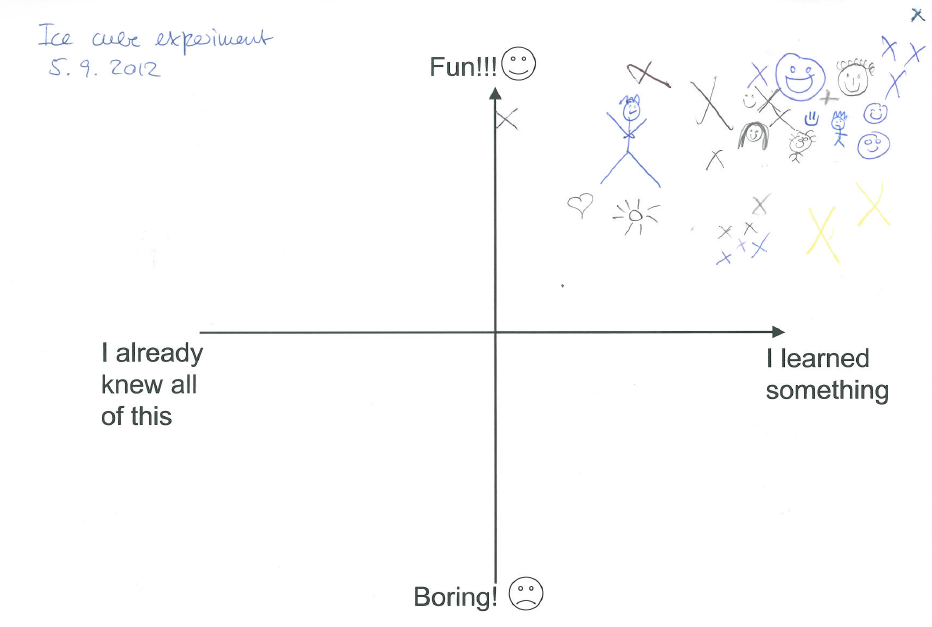

This post presents different ways of posing questions for concept tests.

Concept tests using voting cards were introduced in an earlier post (post no 1 of this series). Here, I want to talk about different types of questions that one could imagine using with this method.

1) Classical multiple choice

In the classical multiple choice version, four different answers are given for each question, only one of which is correct. This is the tried-and-tested method, but it is often pretty boring.

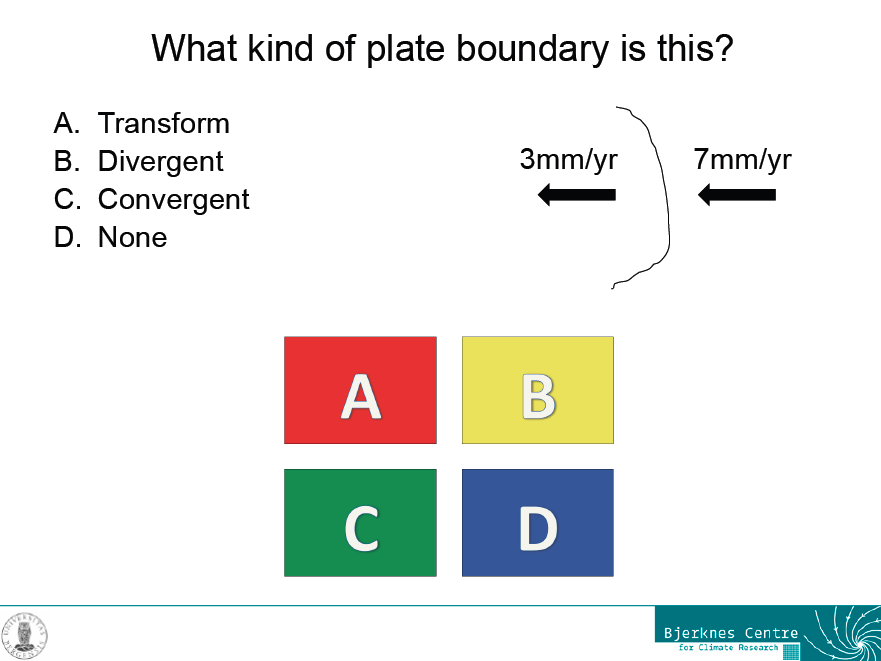

An example slide for a question with one correct answer

However, even this kind of question can lead to good discussions, for example when it introduces a new concept rather than just testing an old one. In the example above, we had talked about different kinds of plate boundaries during the lecture, but not about the frame of reference in which the movement of plates is described. So what seemed to be a really confusing question at first was used to initiate a discussion that went into a lot more depth than either the textbook or the lecture, simply because students kept asking questions.

2) Several correct answers

A twist on the classical multiple choice is a question for which more than one correct answer is given, without explicitly mentioning that fact in the question. In a way, this is tricking the students a bit, because they are used to there being only one correct answer. For that reason, they often stop reading the answers as soon as they have come across one that they know is correct. Giving several correct answers is a good way of initiating a discussion in class if different people chose different answers and are sure that their answers are correct. Students who have already gained some experience with the method often have the confidence to speak up during the “voting” and say they think that more than one answer is correct.

3) No correct answer

This is a bit mean, I know. But again, the point of doing these concept tests is not that the students name one correct answer, but that they have thought about a concept enough to be able to answer questions about the topic correctly, and sometimes that includes having the confidence to say that all answers are wrong. And it seems to be very satisfying to students when they can argue that none of the answers that the instructor suggested were correct! Even better when they can propose a correct answer themselves.

4) Problems that aren’t well posed

This is my favorite type of question, and it usually leads to the best discussions. Not only do students have to figure out that the question isn’t well posed, but we can then also discuss which information is missing in order to answer it. After that, we can answer the question for different sets of conditions.

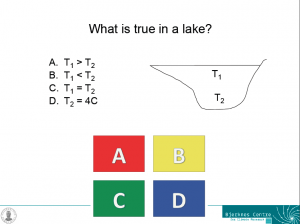

One example slide for a problem that isn’t well posed – each of the answers could be correct under certain conditions, but we do not have enough information to answer the question.

For example, for the question in the figure above, each of the answers could be correct at certain times of the year. During summer, the temperature near the surface is likely to be higher than that near the bottom of the lake (A). During winter, the opposite is likely the case (B). For short periods of the year, it is even possible that the temperature of the lake is homogeneous (C). And, since the density maximum of fresh water occurs at 4 °C, the bottom temperature of a lake is often, but not inevitably, 4 °C (D). If students can discuss this, chances are pretty high that they have understood the density maximum in fresh water and its influence on the temperature stratification in lakes.
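To make the reasoning behind answers (A) to (C) a bit more tangible, here is a minimal Python sketch. It is not part of the original slide or lecture material. It assumes a commonly quoted empirical approximation for the density of pure water at atmospheric pressure, and the function names and example temperatures are made up for illustration. A water column is treated as stably stratified when the surface water is no denser than the bottom water.

```python
def freshwater_density(temp_c: float) -> float:
    """Approximate density of pure water in kg/m^3 at atmospheric pressure.

    Assumption: a commonly quoted empirical fit, reasonable for roughly
    0-30 degC. It has its maximum close to 4 degC.
    """
    return 1000.0 * (
        1.0
        - (temp_c + 288.9414)
        / (508929.2 * (temp_c + 68.12963))
        * (temp_c - 3.9863) ** 2
    )


def is_stably_stratified(surface_c: float, bottom_c: float) -> bool:
    """True if the surface water is not denser than the bottom water."""
    return freshwater_density(surface_c) <= freshwater_density(bottom_c)


# Hypothetical example temperatures (surface, bottom) in degC.
cases = [
    ("summer, warm over cold (A)", 20.0, 4.0),
    ("winter, cold over warmer (B)", 1.0, 4.0),
    ("overturn, homogeneous (C)", 4.0, 4.0),
]

for label, surface_c, bottom_c in cases:
    stable = is_stably_stratified(surface_c, bottom_c)
    print(f"{label}: stable = {stable}")
```

With this approximation, all three situations come out as stable, which is exactly why the question on the slide cannot be answered without knowing the time of year.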

5) Answers that are correct but don’t match the question

This is a tricky one. If the answers are correct in themselves but don’t match the question, it sometimes takes a lot of discussion until everybody agrees that it doesn’t matter how correct a statement is in itself; if it doesn’t address the point in question, it is not a valid answer. This can then be used to find valid answers to the question, or valid questions for the provided answers, or both.

This is post no 2 in a series of 3. Post no 1 introduced the method to the readers of this blog, and post no 3 is about how to introduce the method to the students you are working with.