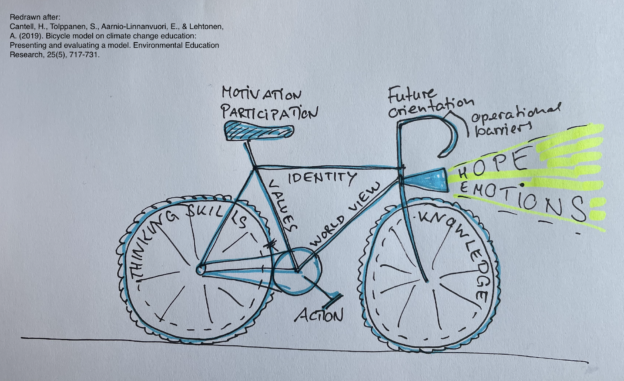

Over the last couple of weeks, I’ve talked to many people who are in one way or another involved in teaching about sustainability at high school or university level. One thing that has struck me is how many seem to be teaching about sustainability without actually believing that we can and will “fix” the big issues like climate, biodiversity, hunger, and wars. And while I don’t have a solution to them either, I found it disheartening to see all these teachers who talk to so many young people and yet seem to have no hope for the future. Surely this cannot be the way to do things. If they don’t see the point of changing things because we are all doomed anyway, how will they support their students in developing the skills and strategies to deal with all the big challenges they will face?

This is where the article I’m summing up below comes in:

“Hope dies, action begins?” The role of hope for proactive sustainability engagement among university students. (Vandaele & Stålhammar, 2022)
