My new Twitter friend Kirsty, my old GFI friend Kjersti and I have been discussing teaching in laboratories. Kirsty recommended an article by Buck et al. (2008) on “Characterizing the level of inquiry in the undergraduate laboratory” (well, she recommended many, but this is one that I’ve read and have been thinking about ever since).

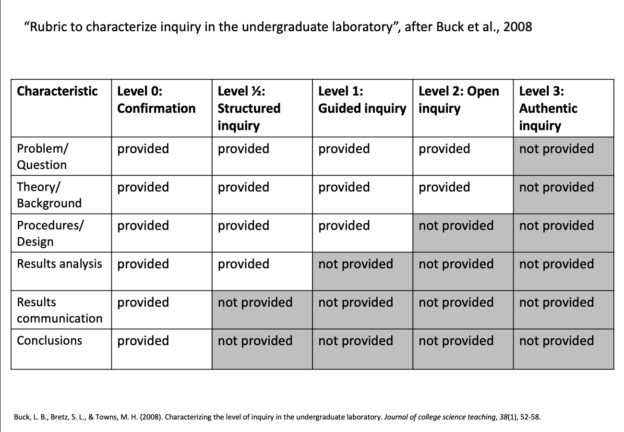

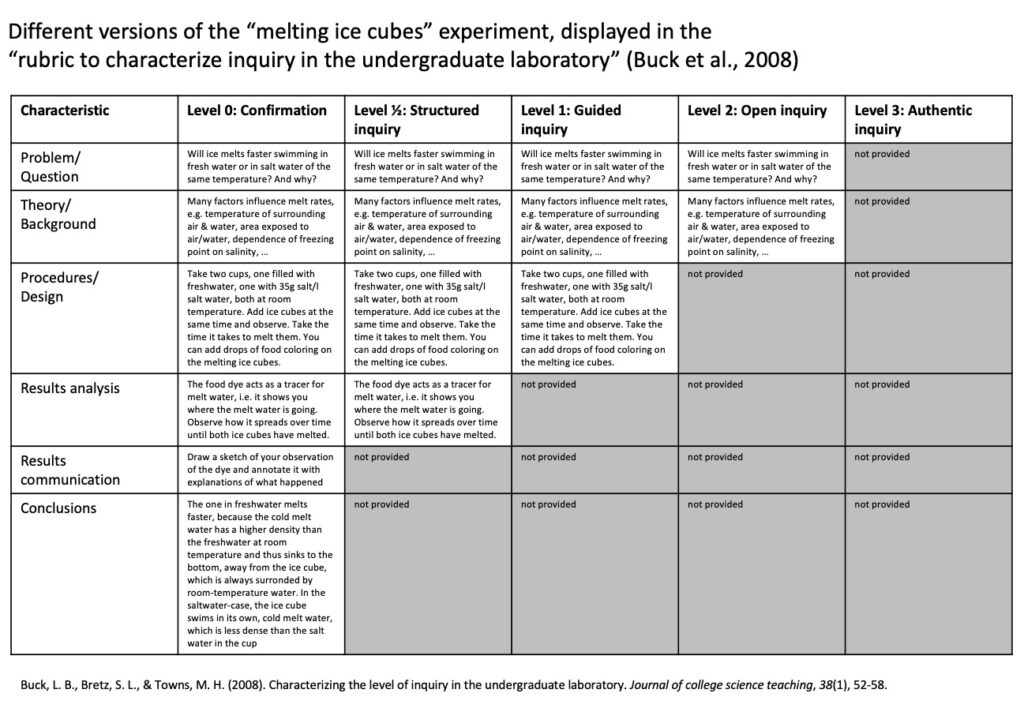

In the article, they present a rubric that I found intriguing: it consists of six different phases of laboratory work and assigns five levels, ranging from a “confirmation” experiment to “authentic inquiry”, depending on whether or not instruction is given for each phase. The “confirmation” level, for example, prescribes everything: the problem or question, the theoretical background, which procedures or experimental designs to use, how the results are to be analysed, how the results are to be communicated, and what the conclusions of the experiment should be. For open inquiry, only the question and theory are provided, and for authentic inquiry, all choices are left to the student.

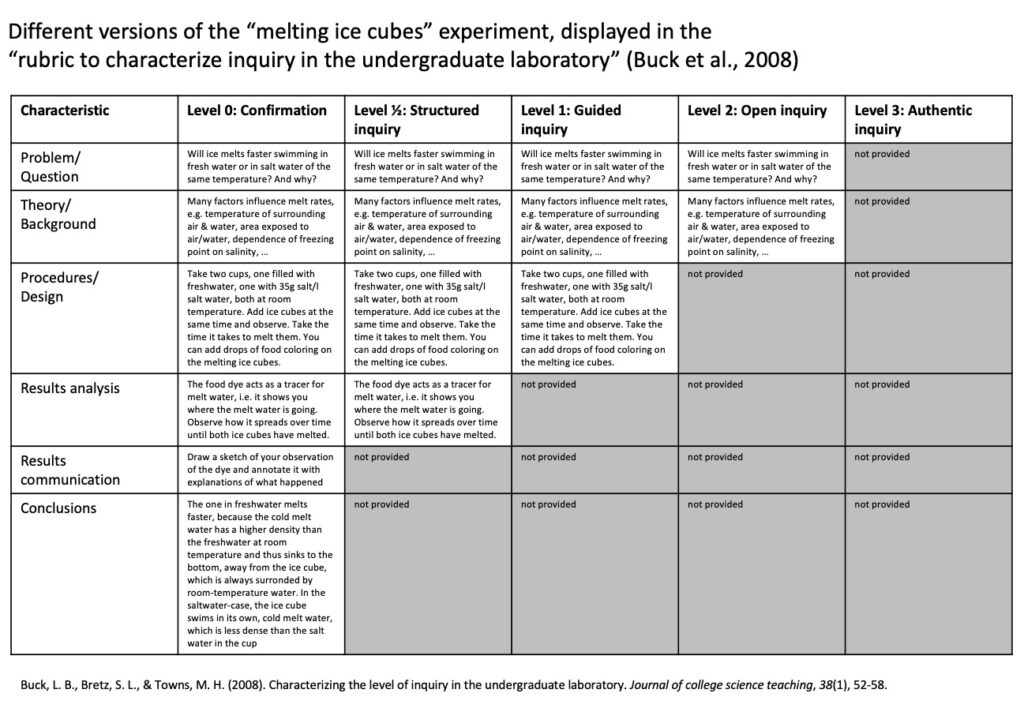

The rubric is intended as a tool to classify existing experiments rather than to design new ones or modify existing ones, but because that’s my favourite way to think things through, I tried plugging my favourite “melting ice cubes” experiment into the rubric. Had I thought about it a little longer before doing that, I might have noticed that I would simply be copying fewer and fewer cells as I moved from left to right. Even though it sounds like a silly thing to do in retrospect, it was still a helpful exercise to go through.

It also made me realize the implications of Kirsty’s heads-up regarding the rubric: “it assumes independence at early stages cannot be provided without independence at later stages”. This is obviously a big limitation; one can think of many other ways to use experiments where things like how results are communicated, or even the conclusion, are provided, while earlier steps are left open for the student to decide. Providing guidance on how to analyse results without prescribing the experimental design might also be really interesting! So while I was super excited at first to use this rubric to provide an overview of all the different ways labs can possibly be structured, it is clearly not comprehensive. And rather than making a comprehensive rubric, a better idea would probably be to really think about why instruction for any of the phases should or should not be provided. A little less cook-book, a little more thought here, too! But it is still a helpful framework to spark thoughts and conversations.

Also, my way of going from one level to the next by simply withholding instruction and information is not the best way to go about it (even though I think it works okay in this case). Since the “melting ice cubes” experiment shows unexpected results, it usually leads organically into open inquiry, as people tend to start asking “what would happen if…?” questions, which I then encourage them to pursue (but this usually only happens in a second step, after they have already run the experiment “my way”). This relates well to “secret objectives” (Bartlett and Dunnett, 2019): a discrepancy appears between what students expect based on previous information and what they then observe in reality (in the “melting ice cube” case, students expect to observe one process and find out that another one dominates), and many jumping-off points exist for further investigation, e.g. the condensation pattern on the cups, or the variation of parameters (what if the ice was forced to the bottom of the cup? What is the influence of the exact temperatures or the water depth, …?).

Introducing an element of surprise might generally be a good idea to spark interest and inquiry. Huber & Moore (2001) suggest using “discrepant events” to initiate discussions. Their example is dropping raisins into carbonated drinks: the raisins first sink to the bottom, then rise as gas bubbles attach to them, only to sink again when the bubbles break upon reaching the surface. They then suggest following up the observation of the discrepant event with a “can you think of a way to…?” question (here: to make the raisins rise to the surface faster), which is followed by brainstorming many different ideas. Later, students are asked “can you find a way to make it happen?”, which means that they pick one of their ideas and design and conduct an experiment. In a last step, Huber & Moore (2001) suggest that students produce a graphical representation of their results or some other product, and “defend” it to their peers.

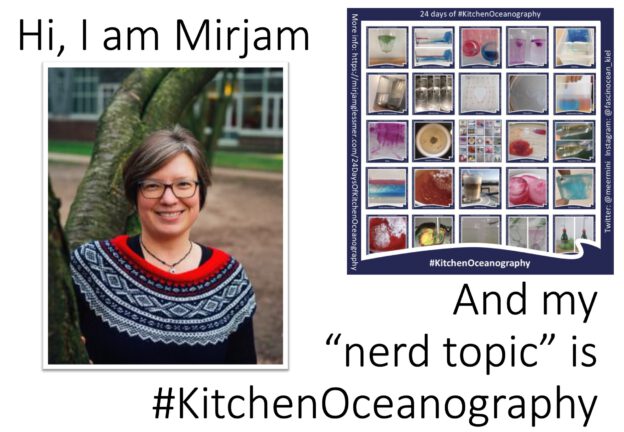

In contrast to how I run my favourite “melting ice cubes” experiment when I am instructing it in real time, I am using a lot of confirmation experiments, for example in my advent calendar “24 days of #KitchenOceanography”. How could they be re-imagined to lead to more investigation and less cook-book-style confirmation, especially when presented on a blog or social media? Ha, you would like to know, wouldn’t you? I’ve started working on that, but it’s not December yet, so you will have to wait a little! :)

I’m also quite intrigued by the “product” that students are asked to produce after their experimentation, and by what would make a good type of product to ask for. In the recent iEarth teaching conversations, Torgny has been speaking of “tangible traces of learning” (in quotation marks, which makes me think there is definitely more behind that term than I realize, but so far my brief literature search has been unsuccessful). But maybe that’s why I like blogging so much: it makes me read articles all the way to the end, think a little more deeply about them, and put the thoughts into semi-cohesive words, thus giving me tangible proof of learning (that I can even google later to remind me what I thought at some point)? So maybe everybody should be allowed to find their own kind of product, depending on what works best for them. On the other hand, for the iEarth teaching conversations, I really like the format of one page of text, maximum, because I really have to focus and edit it (not as much space for rambling as on my blog, but a substantially higher time investment… ;-)). I also think giving some kind of guidance is helpful, both to avoid students being spoilt for choice, and to make sure they focus their time and energy on things that help them reach the learning outcomes. Cutting videos, for example, might be a great skill to develop, but it might not be the one you want to develop in your course. Or maybe you do, or maybe the motivational effects of letting them choose are more important, in which case that’s great, too! One thing that we’ve done recently is to ask students to write blog or social media posts instead of classical lab reports, which worked out really well and seems to have motivated them a lot (check out Johanna Knauf’s brilliant comic!!!).

Kirsty also mentioned a second point to keep in mind regarding the Buck et al. (2008) rubric: it is only about what is provided by the teacher, not about the students’ role in all this. That’s an easy trap to fall into, and one that I don’t have any smart ideas about right now. I am looking forward to discussing more thoughts on this, Kirsty :)

In any case, the rubric made me think about inquiry in labs in a new way, and that’s always a good thing! :)

—

Bartlett, P. A., & Dunnett, K. (2019). Secret objectives: promoting inquiry and tackling preconceptions in teaching laboratories. arXiv:1905.07267v1 [physics.ed-ph].

Buck, L. B., Bretz, S. L., & Towns, M. H. (2008). Characterizing the level of inquiry in the undergraduate laboratory. Journal of College Science Teaching, 38(1), 52–58.

Huber, R. A., & Moore, C. J. (2001). A model for extending hands-on science to be inquiry based. School Science and Mathematics, 101(1), 32–41.