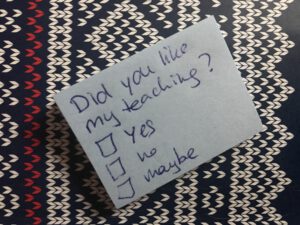

Student evaluations of teaching as a “technology of power” (reading Rodriguez, Rodriguez, & Freeman, 2020)

I just read this super interesting article about student evaluations of teaching as a “technology of power” that acts to keep the system from changing away from the status quo,…