Currently reading Stensaker & Matear (2025) on “Student involvement in quality assurance: Perspectives and practices towards persistent partnerships”

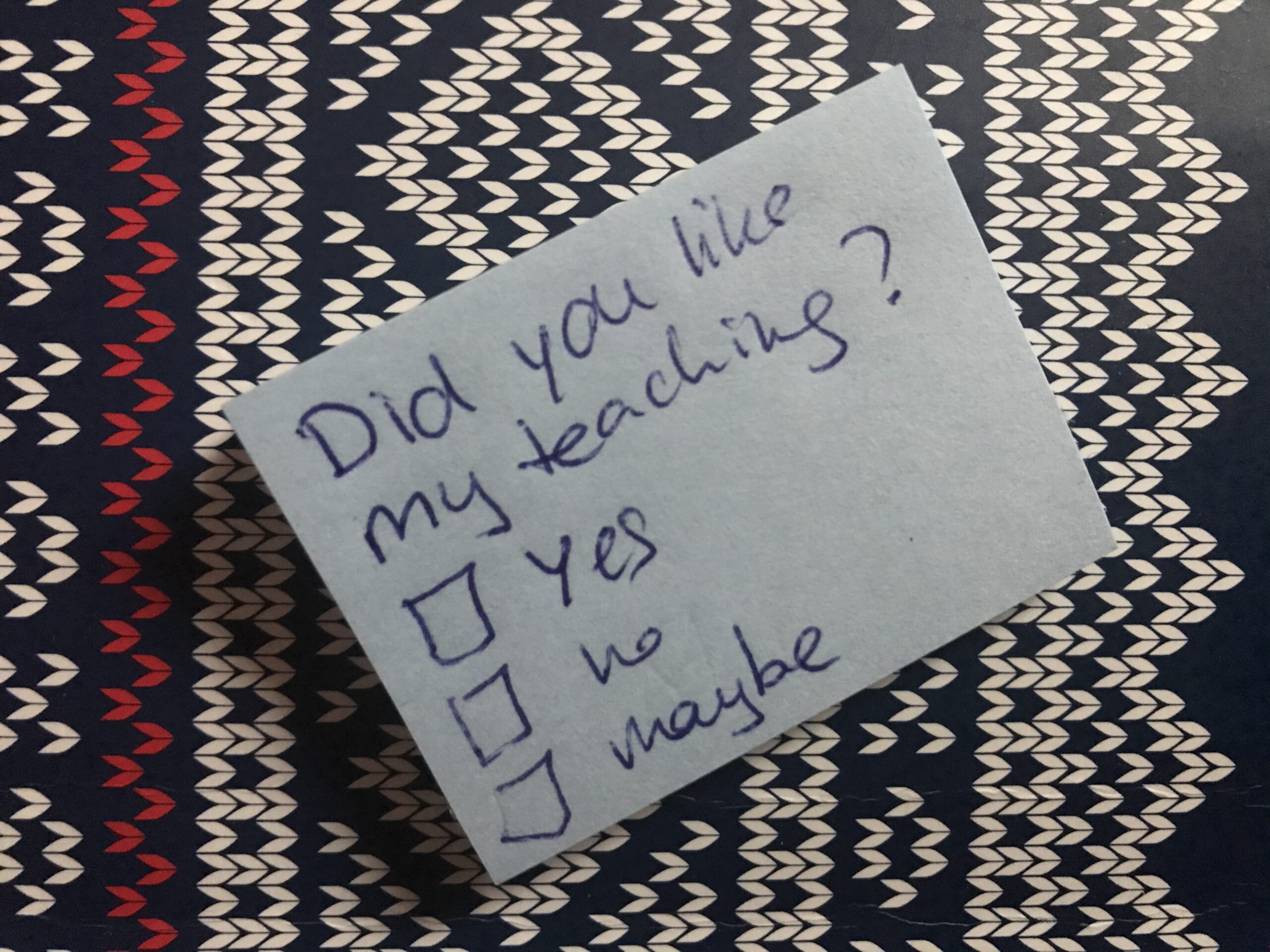

Including the student perspective in quality assurance of teaching is required by law in Sweden (and other places). In practice, this means that students are asked to respond to surveys at the end of every course, have seats on all boards of education and other boards, and have strong, institutionalised student organisations. But of course there are […]