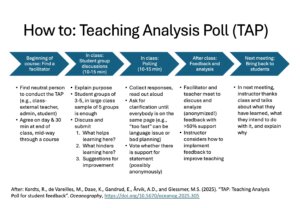

Published! Kordts et al. (2025) “TAP: Teaching Analysis Poll for student feedback”

“Teaching Analysis Polls” are a very useful method for getting formative, constructive feedback from a whole class. An external facilitator comes in and structures an approximately 30-minute discussion…