Published! “Superficially plausible outputs from a Black Box: problematising GenAI tools for analysing qualitative SoTL data” Glessmer & Forsyth (2025)

We had spent the last month reading, coding, discussing, re-coding, discussing some more, re-coding, discussing even more, and then consensus coding free-text answers of 449 students, and submitted the manuscript. “Just for fun, let’s plug it all into ChatGPT!” Rachel said. And so we did. And after 4 seconds, out came an analysis that looked almost identical to what we had painstakingly and carefully done over weeks and weeks and weeks. Wow! “But I am kind of curious where the differences come from. What did it see that we didn’t?” Mirjam asked. And that is how it all began.

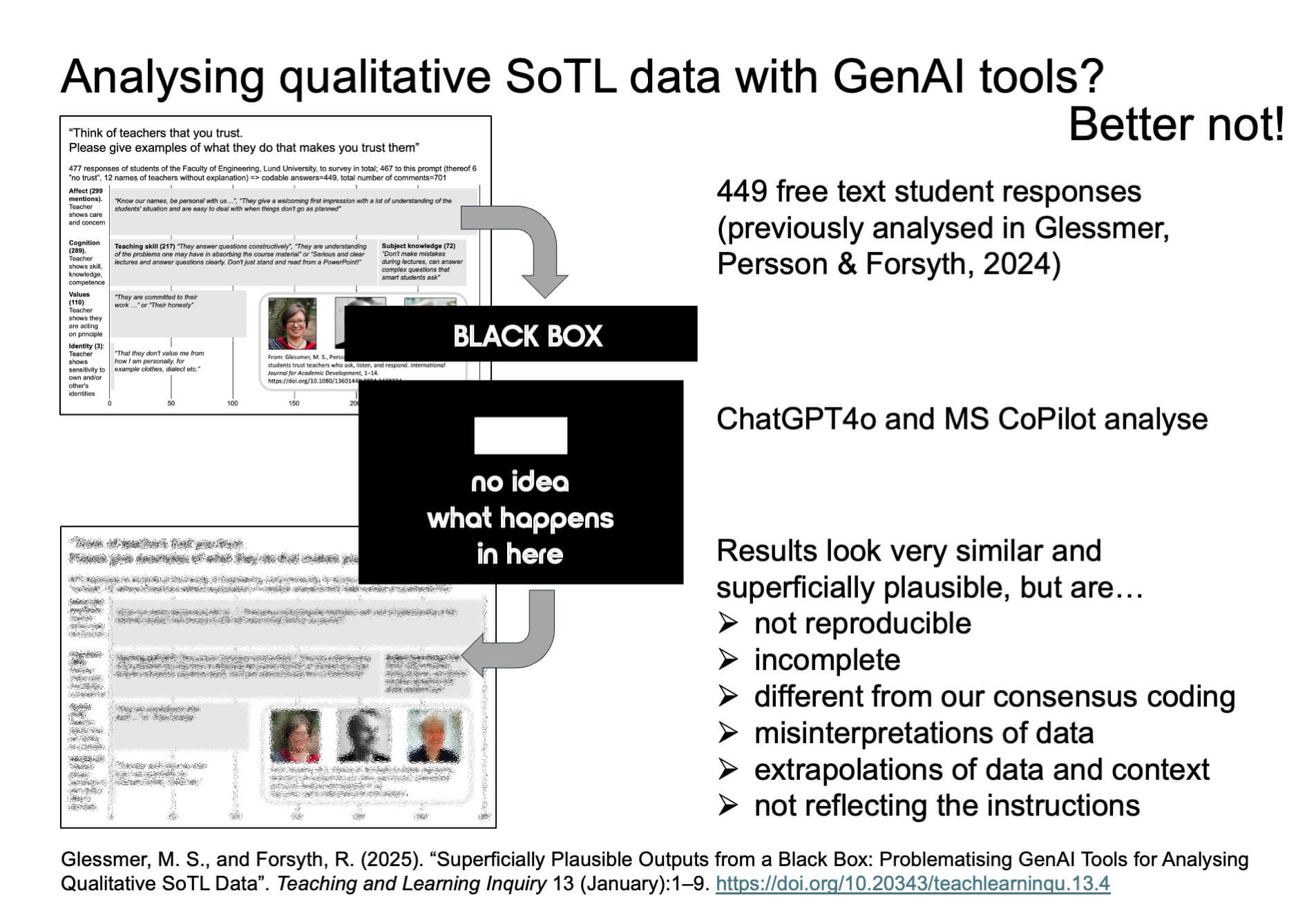

In the article “Superficially plausible outputs from a Black Box: problematising GenAI tools for analysing qualitative SoTL data”, Rachel Forsyth and myself report on what happened next. How we discovered, little by little, the problems with what we had thought was such a time saver. How, when we repeated the same prompt on the same data, the responses differed. How, when summarizing an interview, sometimes assumptions about the gender of speakers was made even though there was no indication at all in the text. How sometimes context was made up to expand on our data. Most problematically, how we cannot describe what is happening inside a Generative AI tool, and therefore it does not fulfil a basic requirement of a scientific analysis: That you can describe a method, and reproduce results.

For us as academic developers, this definitely answers a question that we are asked over and over again by teachers working on SoTL projects: Can I use GenAI on student responses to save time? And the answer is yes, of course you can, but you really should not. The answers you will get are not scientifically sound, they are a black box’s best guess of what would be said in the context. Also, you are not giving those student responses the attention and respect that they deserve and that you want to demonstrate as you build a trusting relationship conducive to learning. Would my answer be different if I was thinking about assessment tasks rather than research? Right now, and for the kind of topics I am teaching and the type of assessment questions I am asking, no. But of course there are also considerations about how much time to put into reading responses vs what the time could be spent on otherwise, especially for large student groups and for different subjects… So yes, we live in interesting times!

What the article does not include, and it really should have, is a problematization of GenAI beyond whether or not it is a valid tool for analyzing qualitative data. We should have also discussed environmental impact of running the server farms needed to train such a model and to process user requests, the intellectual property that was scraped off the internet without consent, the baseless, yet common, assumption of benevolence and neutrality of the training process. These alone might be reason enough to not use GenAI, even before finding out, like we did, that it is not fit for at least this specific purpose.

But, below is a picture of us working on this article. Yes, we had fun! Rachel is just the best person to work with!

Glessmer, M. S., and Forsyth, R. (2025). “Superficially Plausible Outputs from a Black Box: Problematising GenAI Tools for Analysing Qualitative SoTL Data”. Teaching and Learning Inquiry 13 (January):1–9. https://doi.org/10.20343/teachlearninqu.13.4